Whilst most application development environments use local databases running on individual developer machines, some projects, such as ETL and data warehousing, may need to work with shared databases, where the databases run on a shared server and are used by many developers working on multiple projects.

In these circumstances the developers have to work very carefully, in close co-ordination, to ensure that they don't inadvertently undo each other's work. A developer who has to work in isolation will often make a temporary copy of the database on the shared server and work on that copy separately. This can leave a lot of unwanted branch databases lying around the server, badly out of sync with the trunk version.

Now that Microsoft has made Docker images available for SQL Server 2016 and 2017 Developer Edition, there is a much better solution for managing shared database development.

I am documenting here the process I went through to set up such an environment with a set of sample databases. For simplicity's sake, I have created only a very basic database structure and have not used any complex models.

The Docker engine for Windows can run native containers on Windows Server 2016, or run with Hyper-V enabled on Windows 10 Pro or Enterprise editions.

For the purpose of this documentation work, I am running Docker on my Windows 10 Pro machine with Hyper-V enabled, and my development environment is based on SQL Server 2016 Developer Edition.

So first I downloaded the Docker Image for SQL Server 2016 Developer from Docker Hub as follows:

The database development environment consists of a database that represents the Trunk branch (the production version) of the sample database application, which I call APP1DB_Trunk.

In order to create a Docker image for this database I took copies of the .MDF and .LDF files of the existing APP1DB database. These files I stored locally in a folder named D:\PalProjects\Docker\sqldb1. Then in the same folder I created a text file named Dockerfile where the docker image instructions are placed as follows:

The FROM instruction tells Docker to use the SQL Server 2016 image, which includes the Windows Server Core base it depends on. The Dockerfile then runs a PowerShell command to create a new folder named SQLServer on the container's C: drive, and copies the .MDF and .LDF database files into it from the local folder. Finally it sets two environment variables: the SA password and the end user licence acceptance indicator. At present I only intend to use SQL Authentication with the SA user to connect to the SQL Server container.
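
To make the shape of such a Dockerfile concrete, here is a minimal Python sketch that renders one from the steps just described. It is not the original Dockerfile: the base image placeholder, the database file names and the password placeholder are all hypothetical, and the forward-slash paths are my own choice to sidestep backslash escaping inside a Dockerfile. The variable names sa_password and ACCEPT_EULA are the ones I understand Microsoft's Windows SQL Server image to read, so check them against the image's own documentation.

```python
def render_dockerfile(base_image, db_files, sa_password, target_dir="C:/SQLServer"):
    """Return Dockerfile text: create a folder, copy the database files, set two variables."""
    lines = [
        f"FROM {base_image}",
        # Create the target folder inside the image at build time.
        f'RUN powershell -Command "mkdir {target_dir}"',
    ]
    # Copy each database file from the build folder into the target folder.
    lines += [f"COPY {name} {target_dir}/" for name in db_files]
    lines += [
        f"ENV sa_password={sa_password}",  # SA password (assumed variable name)
        "ENV ACCEPT_EULA=Y",               # licence acceptance indicator
    ]
    return "\n".join(lines) + "\n"


# All three arguments below are placeholders, not values from the original post.
dockerfile_text = render_dockerfile(
    "<sql-server-2016-developer-image>",
    ["APP1DB.mdf", "APP1DB_log.ldf"],
    "<sa-password>",
)
print(dockerfile_text)
```

Writing the returned text to a file named Dockerfile in the folder that holds the database files would give the build step something to work from.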

Next, I changed directory (CD) to the folder where I had placed the Dockerfile and the database files, and ran the following to create the Docker image of the database Trunk branch.

The -t parameter specifies the tag name given to the image. I have used a naming convention as follows:

<client id>/<database name>_<branch>:<database version>-<sql server version>

Accordingly, my client ID is clt1, the database name is APP1DB, the branch is Trunk, the database version is 1.1 and the SQL Server version is 2016. This is the naming convention I have used in this sample, but you may follow your own. Also note that Docker image names must be all in lower case.
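
The naming convention is easy to apply consistently with a small helper. The sketch below is only an illustration: the function names are my own, and the build arguments assume the Dockerfile sits in the current directory.

```python
def image_tag(client_id, database, branch, db_version, sql_version):
    """Compose <client id>/<database name>_<branch>:<database version>-<sql server version>."""
    # The image name part must be lower case, so normalise it here.
    repository = f"{client_id}/{database}_{branch}".lower()
    return f"{repository}:{db_version}-{sql_version}"


def docker_build_args(tag, context_dir="."):
    """Argument list for building an image with the given tag from context_dir."""
    return ["docker", "build", "-t", tag, context_dir]


trunk_tag = image_tag("clt1", "APP1DB", "Trunk", "1.1", "2016")
print(trunk_tag)  # prints clt1/app1db_trunk:1.1-2016
print(" ".join(docker_build_args(trunk_tag)))
```

The argument list can be handed to subprocess.run unchanged, or joined with spaces and typed at the prompt.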

When this completes, I check the Docker image list with the following command:

This lists all the images present in my repository as depicted below:

The image now contains everything the Dockerfile described. The Dockerfile instructions were processed when the image was built: the PowerShell mkdir command created the C:\SQLServer directory inside the image, and the database files were copied into it from the build folder on the host (D:\PalProjects\Docker\sqldb1). When a container is launched from this image, an instance of SQL Server 2016 should be running inside it. However, the Dockerfile does not have any instructions to actually attach the .mdf and .ldf files to a database. This is supplied as a parameter in the following command, which launches the container from the image:

This command launches the container from the clt1/app1db_trunk:1.1-2016 image given in the last parameter above. The --name parameter assigns the name APP1DB_dev to the container. -d launches the container in detached mode. -p maps a port number on the host (left side of the colon) to a port in the container (right side of the colon). As mentioned earlier, the Dockerfile did not have any instructions for attaching the .mdf and .ldf database files to create a database within the container. This is achieved by an -e parameter, which sets the environment variable attach_dbs. The parameter value is a JSON string containing key/value pairs for the database name (dbName) and the database file and log file names (dbFiles) in a two-element array.

Any number of containers can now be launched from the single image. In addition to the container named APP1DB_dev, which I launched for development, I also launched a second container for testing with the same command, changing only the --name parameter to APP1DB_uat.
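
The attach_dbs value is the fiddly part of that command, mainly because of quoting and the backslashes in Windows paths. The following sketch builds it with Python's json module and assembles the parameters described above. Several things here are assumptions rather than details from the original command: the file names, the dbName value, the 1433:1433 port mapping, and wrapping the object in a JSON list, which is how I recall the image's documentation showing it.

```python
import json


def attach_dbs_value(db_name, mdf_path, ldf_path):
    """Build the JSON text for the attach_dbs environment variable."""
    # dbFiles is a two-element array: the data file, then the log file.
    return json.dumps([{"dbName": db_name, "dbFiles": [mdf_path, ldf_path]}])


def docker_run_args(container_name, image, port_mapping, attach_dbs):
    """Argument list for launching a detached container from the image."""
    return [
        "docker", "run", "-d",
        "-p", port_mapping,                # host port:container port
        "-e", f"attach_dbs={attach_dbs}",  # tells the container which files to attach
        "--name", container_name,
        image,
    ]


# Hypothetical file names and port mapping, for illustration only.
attach = attach_dbs_value(
    "APP1DB", r"C:\SQLServer\APP1DB.mdf", r"C:\SQLServer\APP1DB_log.ldf"
)
dev_args = docker_run_args("APP1DB_dev", "clt1/app1db_trunk:1.1-2016", "1433:1433", attach)
print(dev_args)
```

Passing the list to subprocess.run, rather than pasting a single string into a shell, avoids having to escape the quotes inside the JSON. A second container on the same host would need a different host port on the left side of the mapping.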

To check which containers are currently running, issue the following command:

The currently running containers are listed as depicted below:

The container's configuration can be examined by using the docker inspect command:

This will show the entire configuration as a JSON object. However, to connect to the SQL Server instance running inside the container, I only need to know the IP address that Docker has assigned to the container. The following parameter can be used to filter the specific JSON item within the configuration:

The above parameter will show the IP address Docker has assigned to the container.
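
The same lookup can be done by parsing the JSON that docker inspect prints. The sketch below works on a trimmed, made-up sample rather than real output: the network name nat and the address are illustrative values only, and the function name is my own.

```python
import json


def container_ips(inspect_output):
    """Map each network name to the container's IP address on that network."""
    # docker inspect prints a JSON array with one object per inspected container.
    containers = json.loads(inspect_output)
    networks = containers[0]["NetworkSettings"]["Networks"]
    return {name: settings["IPAddress"] for name, settings in networks.items()}


# Trimmed, invented sample standing in for real docker inspect output.
sample_output = """
[
  {
    "Name": "/APP1DB_dev",
    "NetworkSettings": {
      "Networks": {
        "nat": {"IPAddress": "172.20.0.2"}
      }
    }
  }
]
"""
print(container_ips(sample_output))
```

In practice the input would be the standard output captured from running docker inspect against the container name.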

Note: the IP address displayed above is only visible within the host environment. This means it cannot be reached from elsewhere on the LAN. However, it is possible to configure the container's network so that Docker uses the LAN's IP address range when assigning an address to the container.

For this documentation, I have not gone into this network configuration and have limited the activity to the host environment only. Visit the Docker reference documentation website at https://docs.docker.com/engine/reference/run/ for detailed information on this topic. I shall update this document at a later date with how to configure the container network to use the LAN's IP address range.

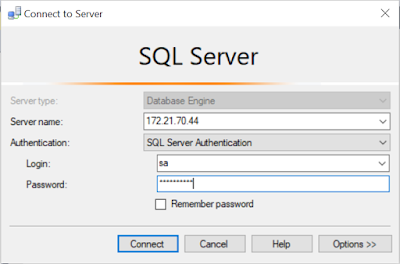

So with that said, to connect to the SQL Server instance within the above containers, launch SQL Server Management Studio and connect as follows:

After successfully connecting, it will be possible to see the database that was attached within the Object Explorer:

Having connected to the database, I then added some data to TABLE2 like so:

I have kept the best part of Docker containers until last. As I said at the beginning, when developing and testing in a shared database environment, there is often a need to have the database in a specific state to conduct a specific test, perhaps to run a lengthy ETL flow that could take several hours or days. This can be very tricky to co-ordinate with other developers and testers. You can always launch a new container from the trunk image, but that defeats the point of having the database in the state appropriate for the test, mostly because the test data is lost.

The answer is to commit the container's current state to a new image. Before a running container can be committed, however, it must be stopped. It appears that this is a limitation only on Windows and does not apply to Linux platforms. Hopefully this is something that will be addressed in the future. To stop the running container, issue the following command:

With the container stopped, its current state can be committed to a new image with the following command:

This creates a new image by the name clt1/app1db_dev_test2:1.2-2016. As per my naming convention, this refers to a development branch of the APP1DB database used specifically for test2. Since I could also have added new objects such as tables, views and stored procedures, I have increased the version to 1.2. The -2016 still refers to the SQL Server 2016 version.

The new image can be seen in the repository with the 'docker image ls' command as used previously. This image now contains all the changes that were made so far in the development branch under the APP1DB_dev container. Now a new container can be launched from the newly committed image with the following command:

This launches the new container with the name APP1DB_dev_test2. I then examine its IP address in order to connect to the SQL Server instance as follows:

I can now connect to this IP Address with SQL Server Management Studio and run the required tests in isolation. The following shows the new rows that I added to the development branch container before committing its image.

Those rows can now be seen in the new container, and I can now make any changes to this container, APP1DB_dev_test2, without affecting the other development branch container, APP1DB_dev.
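
The stop, commit and relaunch steps described above can be strung together in a short script. This is a sketch of the sequence rather than the exact commands I ran: the flags on the final docker run and the 1434:1433 port mapping are assumptions, and the helper name is hypothetical.

```python
def branch_commands(source_container, new_image, new_container, port_mapping):
    """Commands that snapshot a container into a new image and launch a copy of it."""
    return [
        # On Windows the container has to be stopped before it can be committed.
        ["docker", "stop", source_container],
        # Save the stopped container's current state as a new image.
        ["docker", "commit", source_container, new_image],
        # Launch an isolated container from the committed image.
        ["docker", "run", "-d", "-p", port_mapping, "--name", new_container, new_image],
    ]


commands = branch_commands(
    "APP1DB_dev",
    "clt1/app1db_dev_test2:1.2-2016",
    "APP1DB_dev_test2",
    "1434:1433",  # hypothetical host port, chosen so it does not clash with another container
)
for command in commands:
    print(" ".join(command))
```

Each inner list can be passed to subprocess.run with check=True so that a failed stop or commit halts the sequence before the new container is launched.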
